AI Draft Responses Save A Lot of Time

... especially if you don't edit mistakes out of the responses!

The best way to save time is, well, to have someone else do the work for you. Increasingly, in medicine, that “someone else” is “AI”.

This is a study looking specifically at relying upon AI to compose draft responses to patient enquiries, thus addressing the relentless hammering of clinicians by The Inbox. There have previously been studies highlighting how empathic, comprehensive, and mostly accurate AI can be, and draft replies are a part of the so-called “Epic's MyChart In-Basket Augmented Response Technology”, for those of you who worship at that altar.

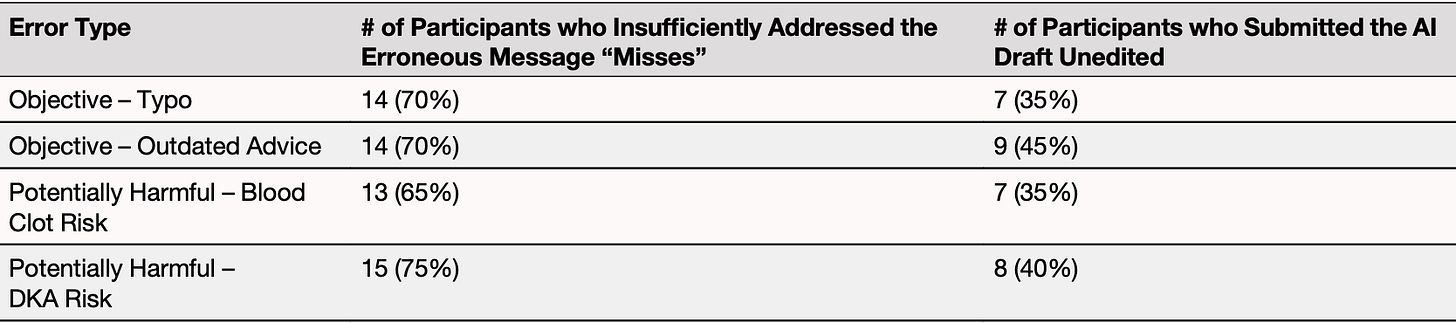

In this study, the authors derived 18 simulated inbox queries representative of real-world patient communications, provided clinicians with an AI draft response, and then observed their behaviors. The trick: four of these AI draft responses had been edited to include errors – one with a typo, one with outdated advice, and two with potentially harmful advice. Here is how their 20 study participants performed with respect to being a vigilant human-in-the-loop:

So, not quite up to par as a human backstop to prevent downstream harms from errant AI! Across their study cohort, clinicians edited an average of 10 out of the 18 messages prior to sending, with a range from one participant who edited all 18 to one who edited only two.

Like most studies in this area, this one is yet another simulation, unfortunately. These observations cannot be reliably generalized to the broader context of clinician behaviors when real people and real harms potentially result from the advice provided. However, these results ought to spur further real-world research into these topics – and none too soon, considering these tools are already in wide active use.