Did This AI Screener Really Decrease Opioid Use Hospitalizations?

It certainly badgered folks with more alerts, though.

Opioid use disorder is a real problem. The burden of manually screening hospital admissions to target addiction medicine resources is also a real problem. Let’s use AI!

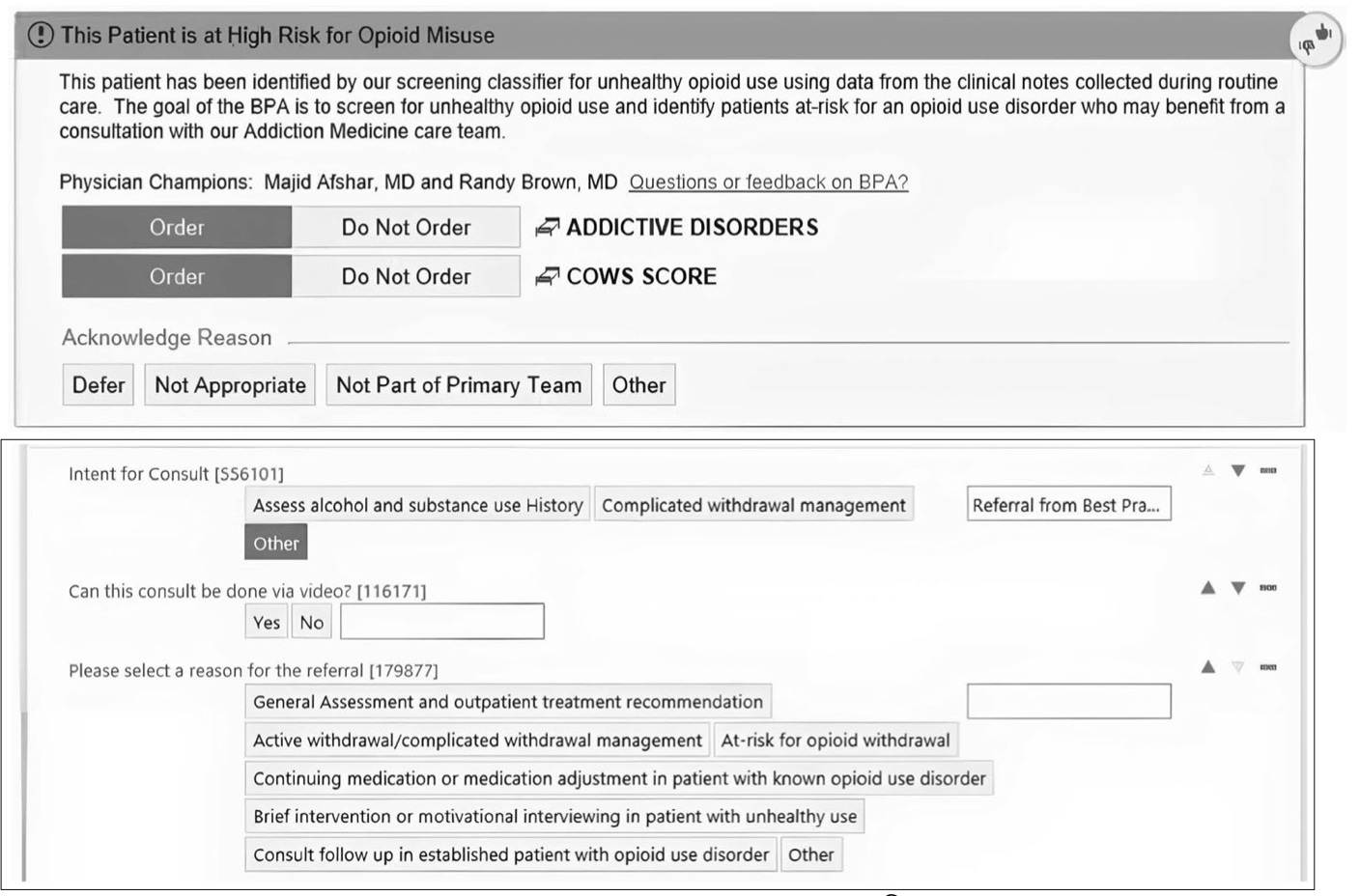

And, thus it became so – a model trained to read freetext notes for patients hospitalized for any cause and determine suitability for addiction medicine consultation. For patients flagged by the model, clinicians receive this helpful “best practice alert” (BPA):

This all seems potentially sensible – and, even better, in the pre/post design reported by this publication, the authors attribute a substantial drop in 30-day post-discharge hospitalization or ED visit, from 37.2% to 28.1%, in patients who received an addiction medicine consultation.

“Huge, if true”, as they say.

But, the publication does not reveal any useful clues as to whether these outcomes could be attributable to their intervention:

Virtually the same percentage of patients were evaluated by their addiction medicine service pre- and post-implementation (1.35% to 1.51%).

There are minimal differences between the reported pre- and post-implementation baseline characteristics of their addiction medicine consultations. If anything, the post-implementation cohort is less enriched with patients with coded alcohol and drug misuse disorders.

Virtually the same overall percentage of hospitalized patients were discharged with medications for opioid use disorder (0.71% to 0.76%).

Only 34 out of 267 consultations in the post-implementation phase were ordered via the BPA.

So, if the BPA was barely able to nudge patients into addiction medicine consultation – and there’s no clear indication these patients would have been “neglected” otherwise – and impact on management is not substantially different, there is simply no face validity for attributing the 30-day hospitalization findings to this BPA. Indeed, the extra 10% of patients with alcohol use disorder may be enough to tip the scales in favor of the BPA cohort.
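For a back-of-envelope check, the figures quoted above can be lined up side by side. This is only a sketch using the counts and percentages as reported in this post, not the paper's underlying data; the variable and function names are my own.

```python
# Figures as quoted in this post; names are illustrative only.
consults_post = 267          # addiction medicine consultations, post-implementation
consults_via_bpa = 34        # of those, ordered via the BPA

consult_rate = (1.35, 1.51)  # % of hospitalized patients evaluated, pre -> post
moud_rate = (0.71, 0.76)     # % discharged with medications for OUD, pre -> post
revisit_rate = (37.2, 28.1)  # % with 30-day hospitalization or ED visit, pre -> post


def point_change(pre, post):
    """Absolute change in percentage points, rounded to 2 decimals."""
    return round(post - pre, 2)


# Share of post-implementation consultations that came through the alert.
bpa_share = round(100 * consults_via_bpa / consults_post, 1)

print(f"Consults ordered via BPA: {bpa_share}%")
print(f"Consult rate change: {point_change(*consult_rate)} points")
print(f"Discharge medication rate change: {point_change(*moud_rate)} points")
print(f"30-day revisit rate change: {point_change(*revisit_rate)} points")
```

Roughly one in eight post-implementation consultations came through the alert, and the process measures moved by fractions of a percentage point, while the outcome moved by about nine points. That mismatch is the crux of the problem.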

The authors go on to mention their model was “cost-effective”, but that entire argument is moot if the clinical impact cannot be believed. The one clinical impact that is clearly believable, though: the extra 4,238 triggered alerts, 90+% of which were dismissed without even an acknowledgement.