Doctors Don't Report What Patients Tell Them?

... or is it really just lost in translation?

Regrettably, this wasn’t the article I hoped it would be when I picked it up. I was hoping for one that somehow captured patient-reported symptoms from the clinical conversation and then compared them to the pared-down terseness of finalised clinician notes. However, this is a lot less insightful:

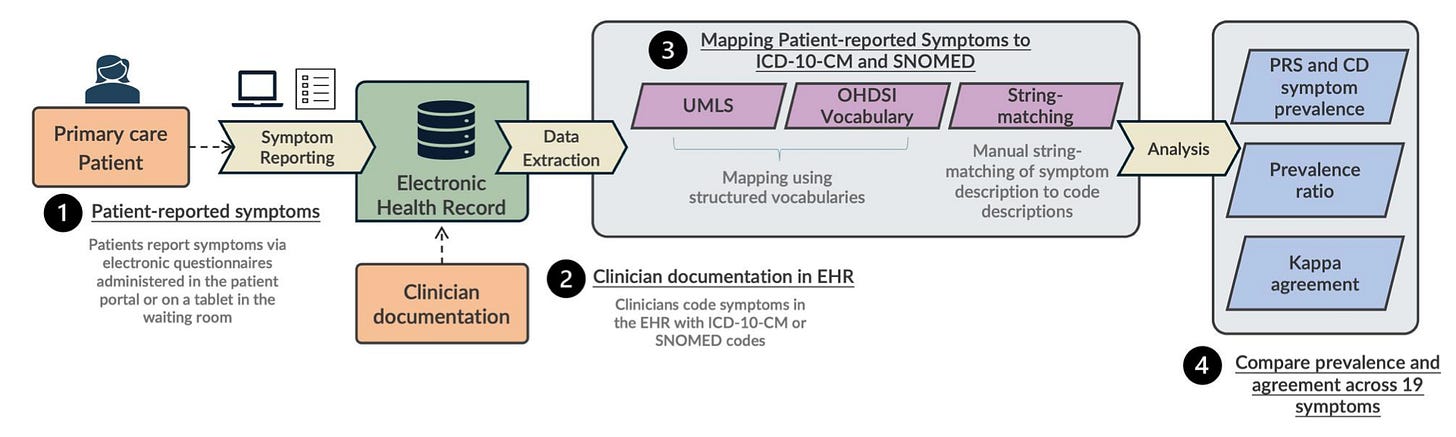

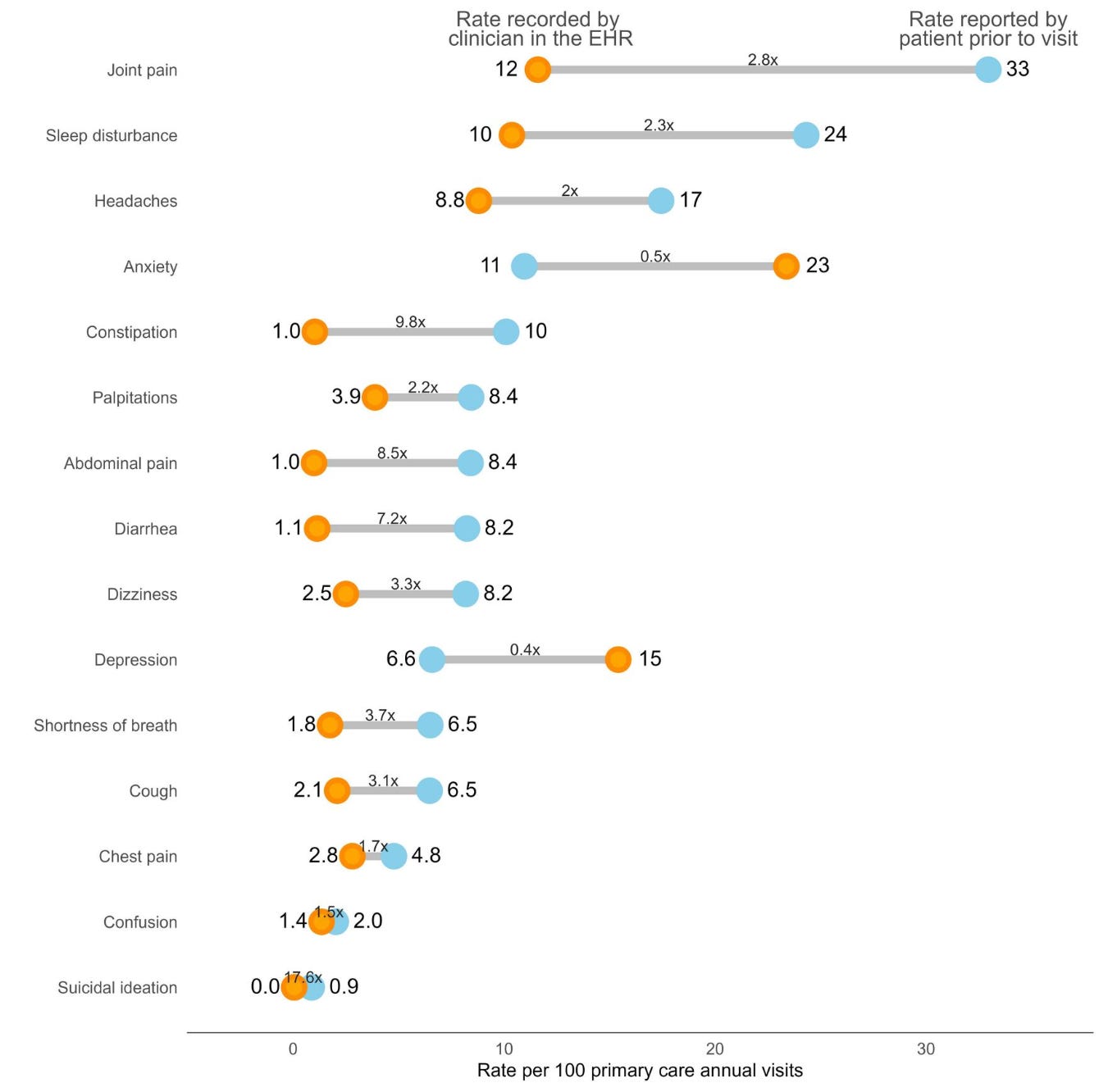

In this workflow, patients respond to a structured survey asking a range of questions regarding symptoms: basic constitutional complaints, the GAD-7, and the PHQ-9. The authors then compared the positive findings from the survey with the structured diagnosis codes generated from each visit. They measured prevalence and agreement and, lo, there are discrepancies.

This doesn’t mean the patient-reported symptoms went unmentioned in clinical documentation, however; it means only that they are missing from the ICD-10 or SNOMED coding related to the visit. That has far fewer clinical implications, and matters mainly to researchers who scrape the EHR for patient-reported symptoms as part of their studies.
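For anyone curious what "prevalence and agreement" looks like in practice, here is a minimal sketch of that sort of comparison for a single symptom. The article's exact statistics aren't described here, so the use of Cohen's kappa is my assumption, the function and field names are hypothetical, and the ten paired visits are invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Agreement:
    survey_prevalence: float    # share of visits where the patient reported the symptom
    coded_prevalence: float     # share of visits with a matching diagnosis code
    observed_agreement: float   # share of visits where survey and coding agree
    kappa: float                # Cohen's kappa (chance-corrected agreement)


def compare(survey: Sequence[bool], coded: Sequence[bool]) -> Agreement:
    """Compare paired per-visit flags: survey-positive vs. code-present."""
    if len(survey) != len(coded) or len(survey) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(survey)
    p_survey = sum(bool(s) for s in survey) / n
    p_coded = sum(bool(c) for c in coded) / n
    # Observed agreement: both positive or both negative.
    p_obs = sum(bool(s) == bool(c) for s, c in zip(survey, coded)) / n
    # Agreement expected by chance if the two sources were independent.
    p_exp = p_survey * p_coded + (1 - p_survey) * (1 - p_coded)
    # Kappa is undefined when chance agreement is already perfect.
    kappa = float("nan") if p_exp == 1 else (p_obs - p_exp) / (1 - p_exp)
    return Agreement(p_survey, p_coded, p_obs, kappa)


# Invented data: 4 of 10 patients report the symptom, only 1 visit is coded for it.
survey_flags = [True, True, True, True, False, False, False, False, False, False]
coded_flags = [True, False, False, False, False, False, False, False, False, False]
result = compare(survey_flags, coded_flags)
print(result)
```

A large gap between the two prevalences alongside a low kappa is the kind of discrepancy at issue, and nothing in a calculation like this can tell you whether the symptom appeared in the free-text note.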

I suspect, however, that the increasing use of ambient scribing is going to increase how many patient-reported symptoms make it into clinical documentation overall. Whether that ultimately translates to increased coding of these symptoms is another open question, but it ought to lead to an interesting line of investigation: whether the changes in documentation characteristics drive other downstream changes in physician behavior.