LLMs, Now Perceiving Time

Everything no longer happens everywhere all at once.

In health, everything is preceded by important events. However, when clinical histories and contextual information are popped into general-purpose LLMs, the concept of “time” is lost.

Yes, general LLMs have connections across their layers between concepts representing the “past”, and their inputs can include time-based information, but this is different from having a transformer architecture specifically built to incorporate temporal attributes. There is an obvious need for such applications in health, such as this common scenario:

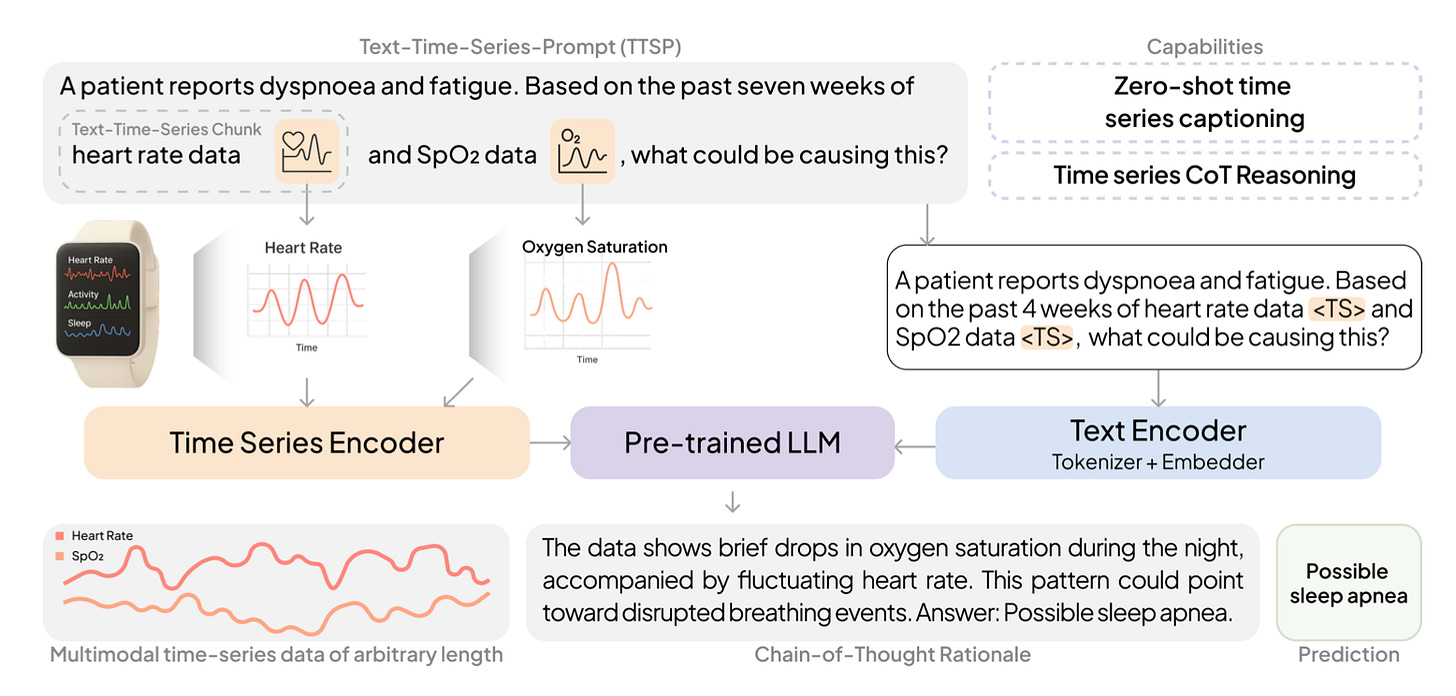

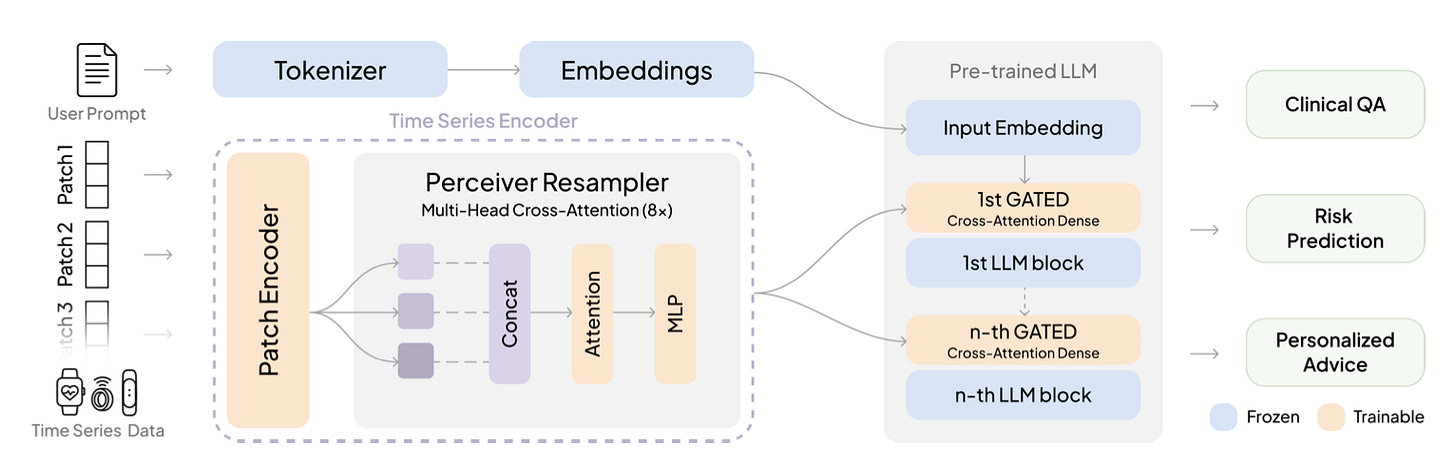
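To make the distinction concrete, one common way to give a transformer an explicit sense of time is to encode each event's timestamp numerically instead of writing it into the text. The sketch below is an illustration, not the method from the paper discussed here: it adapts the sinusoidal positional encoding of the original Transformer to real-valued elapsed time, so two events two days apart and two events two hundred days apart get different encodings even when they sit next to each other in the input. The function names, the `dim` and `max_period` defaults, and the use of days as the unit are all assumptions.

```python
import math
from typing import List

def time_encoding(elapsed_days: float, dim: int = 8, max_period: float = 10000.0) -> List[float]:
    """Encode a real-valued elapsed time as interleaved sines and cosines.

    Adapted from the Transformer's sinusoidal positional encoding, but driven
    by elapsed time instead of token position (illustrative, not the paper's method).
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    encoding: List[float] = []
    for i in range(dim // 2):
        # Low i: fast-changing components that resolve short gaps.
        # High i: slow-changing components that resolve long gaps.
        freq = 1.0 / (max_period ** (2 * i / dim))
        encoding.append(math.sin(elapsed_days * freq))
        encoding.append(math.cos(elapsed_days * freq))
    return encoding

def add_time(embedding: List[float], elapsed_days: float) -> List[float]:
    """Hypothetical helper: add the time encoding to an event embedding of the same size."""
    return [e + t for e, t in zip(embedding, time_encoding(elapsed_days, dim=len(embedding)))]

# Hypothetical example: the same event embedding recorded 2 days vs 200 days
# after a reference event ends up with different time-aware vectors.
event = [0.1] * 8
recent = add_time(event, 2.0)
distant = add_time(event, 200.0)
print(recent != distant)  # True
```

Plain token positions only capture order; encoding elapsed time also captures the gaps between events, which is much of what matters in a clinical history.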

These authors demonstrate a modification to the transformer architecture, OpenTSLM-Flamingo, that explicitly encodes time-based information:

… with advantages in performance, memory usage, and compute time over traditional LLMs. Evaluating their methods and double-checking their math is beyond my depth as a data scientist, but I certainly appreciate the effort from a conceptual standpoint.
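The “Flamingo” in the name points to DeepMind's Flamingo design, which connects a pretrained language model to another modality through gated cross-attention layers. Assuming OpenTSLM-Flamingo follows that recipe, the core idea can be sketched as follows: text token states attend to embeddings of the time series, and the result is added back through a tanh gate whose scalar parameter starts at zero, so the pretrained model behaves exactly as before at initialization. This is a simplified single-head sketch with no learned projection matrices, written in pure Python for readability; the function names and toy inputs are hypothetical, and this is not the authors' code.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with no learned projections."""
    d = len(queries[0])
    output: Matrix = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output row is a weighted average of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return output

def gated_cross_attention(text_states: Matrix, series_states: Matrix, alpha: float) -> Matrix:
    """Text states attend to time-series embeddings; the attended result is
    added back through a tanh gate, as in Flamingo-style gated cross-attention."""
    attended = cross_attention(text_states, series_states, series_states)
    gate = math.tanh(alpha)
    return [[x + gate * a for x, a in zip(row, att)] for row, att in zip(text_states, attended)]

# Hypothetical toy inputs: 2 text tokens and 3 time-series patches, each 2-dimensional.
text = [[1.0, 0.0], [0.0, 1.0]]
series = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]
print(gated_cross_attention(text, series, alpha=0.0) == text)  # True: gate is closed at init
```

With `alpha = 0.0` the output equals the input text states; during training `alpha` moves away from zero as the model learns how much to rely on the time series.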

Although interesting, this article is both poorly written and poorly edited.