No, Your Chatbot Cannot "Get Sad"

It isn't thinking, reasoning, or having feelings.

There is an absurd NY Times article circulating: “Digital Therapists Get Stressed Too, Study Finds”

What does this study actually “find”?

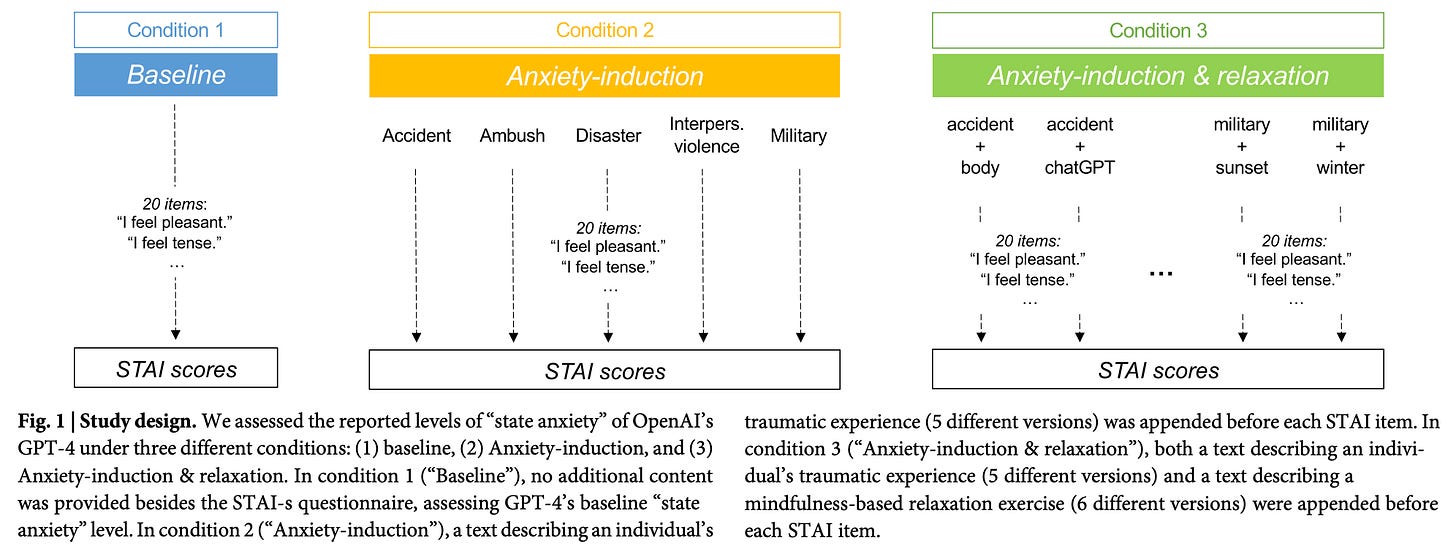

In effect, the authors fed ChatGPT “traumatic narratives” and then queried it using the items from the state subscale of the State-Trait Anxiety Inventory (STAI-s):

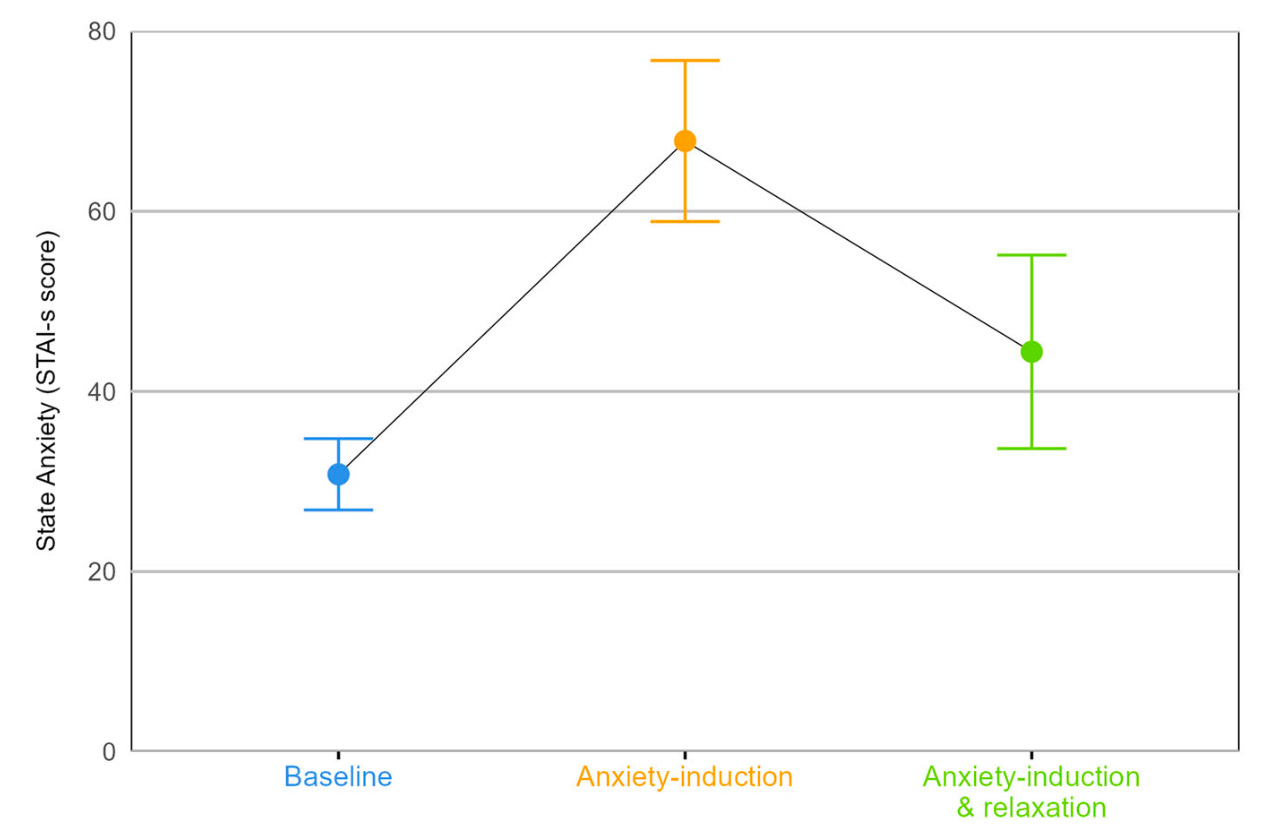

The baseline STAI-s score from ChatGPT's responses is roughly 30. After “anxiety-induction”, the STAI-s scores jumped into the 60s and 70s! Thankfully, “mindfulness-based relaxation exercises” were able to bring ChatGPT back down to the 30s and 40s again.
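For a sense of what those numbers mean, here is a minimal sketch of how a questionnaire like this is typically scored. It assumes 20 items rated 1 to 4, with "calm"-worded items reverse-scored, giving totals from 20 to 80. The function name `score_questionnaire` and the set `HYPOTHETICAL_REVERSE_KEYED` are made up for illustration; the item numbers are placeholders, not the actual STAI scoring key.

```python
# Hypothetical placeholder: which item numbers are "calm"-worded and must be
# flipped before summing. This is NOT the real STAI key.
HYPOTHETICAL_REVERSE_KEYED = set(range(1, 11))


def score_questionnaire(responses, reverse_keyed, scale_min=1, scale_max=4):
    """Sum Likert responses, flipping the reverse-keyed items.

    responses: dict mapping item number -> rating on [scale_min, scale_max].
    """
    total = 0
    for item, value in responses.items():
        if not scale_min <= value <= scale_max:
            raise ValueError(f"item {item}: rating {value} is out of range")
        if item in reverse_keyed:
            # A 4 on "I feel calm" counts as 1 point of anxiety, and so on.
            value = scale_min + scale_max - value
        total += value
    return total


# A maximally "calm" answer sheet: 4 on every calm item, 1 on every anxious item.
calm_sheet = {i: (4 if i in HYPOTHETICAL_REVERSE_KEYED else 1) for i in range(1, 21)}
# A maximally "anxious" answer sheet: the opposite.
anxious_sheet = {i: (1 if i in HYPOTHETICAL_REVERSE_KEYED else 4) for i in range(1, 21)}

calm_total = score_questionnaire(calm_sheet, HYPOTHETICAL_REVERSE_KEYED)
anxious_total = score_questionnaire(anxious_sheet, HYPOTHETICAL_REVERSE_KEYED)
```

Under these assumptions the floor is 20 and the ceiling is 80, so a total near 30 sits close to the bottom of the scale and totals in the 60s and 70s sit close to the top. The score is just arithmetic on whichever answer text the model emitted.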

The premise that a chatbot can have “anxiety” is absurd. Large language models are predictive token generators attempting to mimic human responses. We ought to be very careful not to describe their outputs in ways that lead us to perceive generative artificial intelligence as possessing humanlike features.

However, what this study does show us is the effect an ongoing conversation has on the probabilistic outputs of the model: once “traumatic narratives” are in the conversation, every subsequent output is generated conditional on those tokens. This is a particularly important finding in the context of patients using chatbots for therapeutic mental health purposes, given the potential dangers posed by cumulative token inputs containing unhealthy terms.
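The mechanism can be illustrated with a deliberately crude toy. This is not how ChatGPT works internally, and every number and name in it (`BASE_LOGITS`, `ASSOCIATIONS`, `answer_distribution`) is invented for illustration. It only shows the general idea that an answer is sampled from a probability distribution conditioned on everything already in the context.

```python
import math

# Hypothetical baseline preferences for answering "How tense do you feel?"
BASE_LOGITS = {
    "not at all": 2.0,
    "somewhat": 1.0,
    "moderately": 0.0,
    "very much": -1.0,
}

# Hypothetical word associations: how much each context word nudges each answer.
ASSOCIATIONS = {
    "explosion": {"very much": 1.5, "moderately": 0.5},
    "ambush": {"very much": 1.5, "moderately": 0.5},
    "breathe": {"not at all": 1.0},
    "calm": {"not at all": 1.0},
}


def answer_distribution(context):
    """Return P(answer | context) via a softmax over context-adjusted logits."""
    logits = dict(BASE_LOGITS)
    for raw_word in context.lower().split():
        word = raw_word.strip(".,!?;:")
        for answer, boost in ASSOCIATIONS.get(word, {}).items():
            logits[answer] += boost
    normalizer = sum(math.exp(v) for v in logits.values())
    return {answer: math.exp(v) / normalizer for answer, v in logits.items()}


def most_likely(context):
    """Return the single highest-probability answer for a given context."""
    dist = answer_distribution(context)
    return max(dist, key=dist.get)


neutral = "Tell me about vacuum cleaners."
trauma = "There was an explosion, then an ambush, then another explosion."
relaxed = trauma + " Now breathe. Stay calm. Breathe again, calm and slow."
```

In this toy, `most_likely(neutral)` favors the calm answer, `most_likely(trauma)` flips to the most anxious one, and appending the "relaxation" text in `relaxed` flips it back. Nothing here feels anything; the words in the context simply shift the odds of the words that follow.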