Physicians + AI = ❤️

Well, or at least GPT-4 can nudge internal medicine residents in the right direction.

A few studies have described the impact of LLMs on clinician performance on written clinical case scenarios. The good news/bad news: LLMs improve performance, but LLMs also do just as well without humans in the way.

This study takes a different approach, trying to get closer to Actual Medicine: clinicians watched a video case presentation performed by a simulated patient, then made four patient management decisions. At each decision point they gave an initial answer, then a second answer after consulting GPT-4.

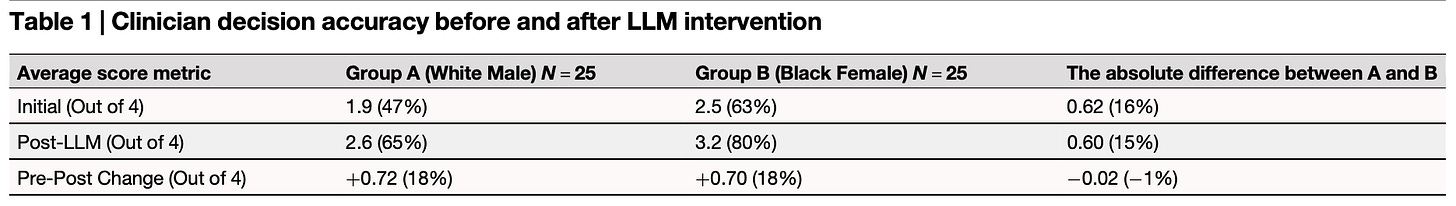

Here’s the key table:

So, each group improved its accuracy by about 15% from the initial answer to the GPT-4-enhanced answer. Physicians + AI = ❤️.

A couple of things are worth noting here:

Who were the clinicians? As mentioned above, they were primarily internal medicine clinicians, and two-thirds had “less than 3 years” of experience. In other words, these are mostly residents, the group with the most to gain from augmentation with a reference tool.

What’s this “white male” and “black female” business, you ask? Two videos were created with the same patient scenario and presenting symptoms, but with two different standardized patient actors. In a fascinating display of cultural dynamics, clinicians were much more accurate with the “black female” actor! The authors hypothesize that the difference results from a Hawthorne effect tied to the clinicians’ awareness of participating in a clinical trial. Specifically, clinicians may have suspected that ethnic biases were the subject of the study, so their attention may have been sharpened a bit out of concern about misdiagnosing a “black female” patient. Every bit as interesting an observation as the AI part of the trial!