Stanford's New Clinical LLM Benchmark

Because clinical tasks are not multiple-choice USMLE questions.

We were all amazed when LLMs first hit the scene and, even in the olden days of GPT-3, could perform passably (pun intended!) well on various medical licensing examinations.

Of course, anyone who has trained in medicine knows the USMLE questions are built in such a fashion that they are primarily associative recall or pathognomonic pattern matching. While the distance traveled over the past few years occasionally seems astounding, it has obvious face validity that a statistical prediction engine would be able to rank the correct answer above a handful of others.

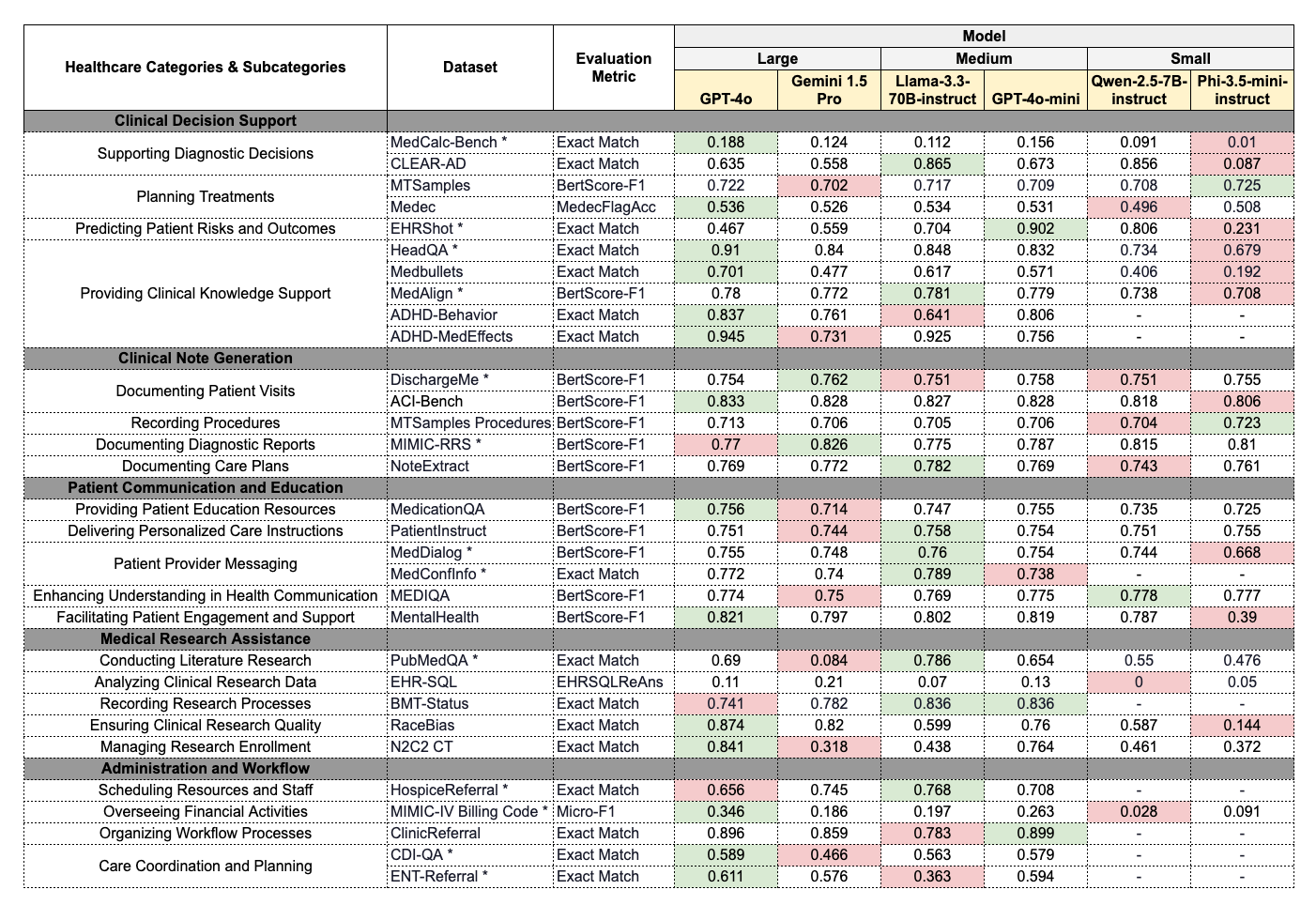

One candidate for the “next generation” of benchmarks is Stanford's MedHELM, a varied set of tasks on which to test evolving LLMs. These range from questions requiring calculations from a clinical case, to prediction from clinical notes, to summarization, and beyond. The benchmark summary figure looks like this:

The benchmark authors go on to comment on some of the limitations of the evaluation metrics and the room for improvement. Their primary concern is finding a better way to evaluate natural language output than BERTScore-F1.
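
For readers who have not met the metric, here is a rough sketch of the greedy-matching F1 at the heart of BERTScore. It is not the benchmark's code: the function names and the two-dimensional "embeddings" are made up for illustration, whereas the real metric uses contextual token embeddings from a BERT-style model and optionally adds idf weighting and baseline rescaling. The sketch also assumes every vector is non-zero.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def greedy_match_f1(candidate, reference):
    """BERTScore-style F1 over two lists of token embeddings (no idf weighting)."""
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(
        max(cosine(c, r) for r in reference) for c in candidate
    ) / len(candidate)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(
        max(cosine(r, c) for c in candidate) for r in reference
    ) / len(reference)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical toy embeddings: the reference has two "tokens", the candidate only one.
reference = [[1.0, 0.0], [0.0, 1.0]]
candidate = [[1.0, 0.0]]

partial_score = greedy_match_f1(candidate, reference)
identical_score = greedy_match_f1(reference, reference)
print(round(partial_score, 3), round(identical_score, 3))
```

In the toy case the candidate covers only half of the reference, so precision is perfect while recall is not. The score reflects embedding similarity to a reference text and nothing more, which is presumably part of why a better evaluation method is wanted.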

An interesting step on the path toward better, more robust evaluation that more closely replicates actual clinical medicine.