The "Agent" General Practitioner

Gaps continue to diminish between AI and humans.

Google DeepMind has published their latest little pre-print puff piece describing progress with their approach to "agentic" LLMs in medicine. The first wave of "chatbots" relied primarily on tuned LLMs, or on clever prompting tricks to extract better output.

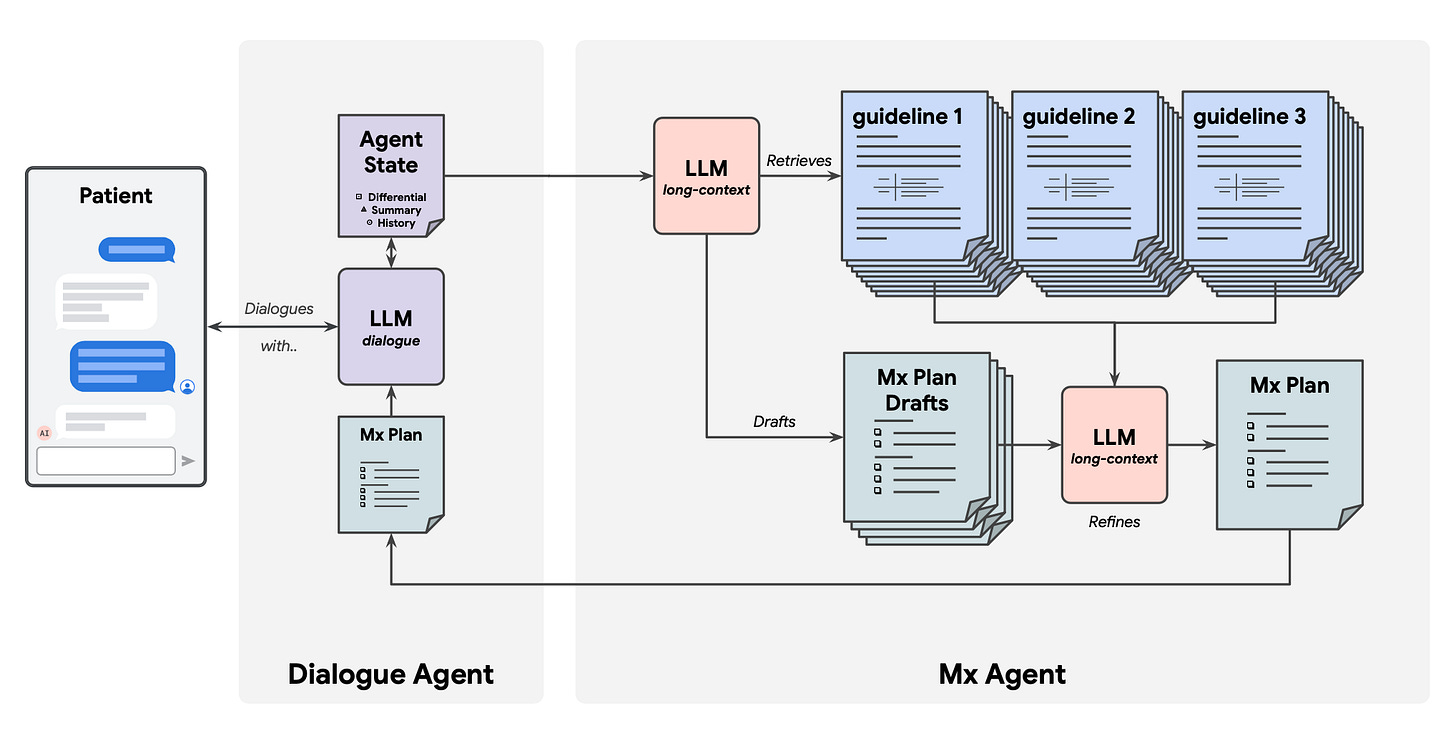

The state of the art has moved forward, however, to agent techniques that chain together LLMs, each with a specific role within a problem context. In the Google demonstration, a "Dialogue Agent" with its own local memory and context holds a responsive conversation with a patient. The Dialogue Agent passes information gathered in conversation to an "Mx Agent", which performs token-heavy "reasoning" and has access to tools. The output from the "Mx Agent" flows back to the "Dialogue Agent" to guide the conversation.

The figurative representation looks like:

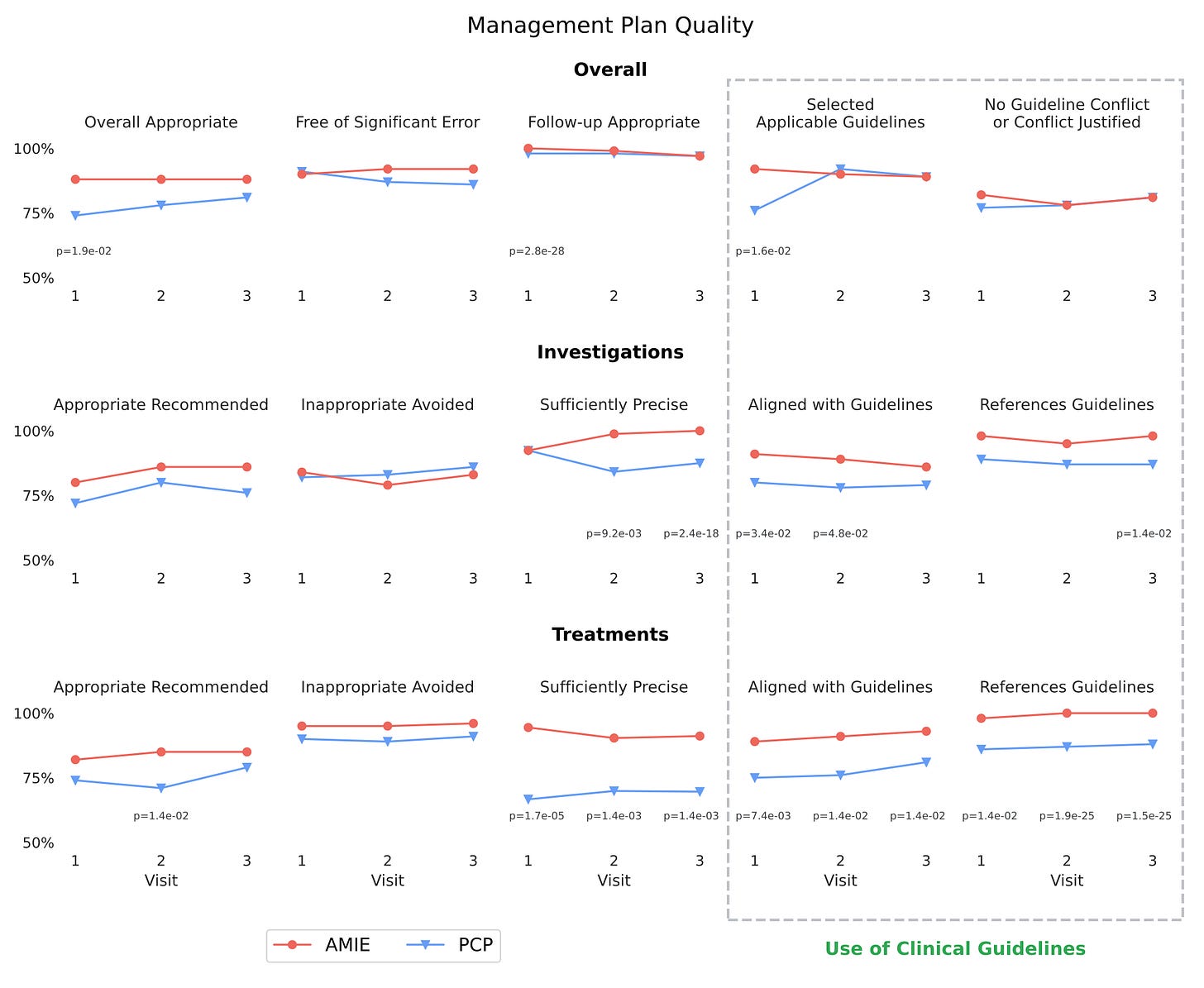
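As a rough illustration of the hand-off between the two agents, here is a minimal Python sketch. It is not Google's implementation: the class names, the `plan` and `respond` methods, the prompt wording, and the choice to consult the "Mx Agent" on every turn are all assumptions made for illustration, and the LLM calls are replaced with stub functions so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical stand-in for an LLM call: takes a prompt, returns text.
LLM = Callable[[str], str]


@dataclass
class MxAgent:
    """Slower 'reasoning' agent: reviews what is known and returns guidance."""
    llm: LLM

    def plan(self, findings: List[str]) -> str:
        prompt = "Findings so far:\n" + "\n".join(findings) + "\nWhat next?"
        return self.llm(prompt)


@dataclass
class DialogueAgent:
    """Conversational agent with its own local memory of the encounter."""
    llm: LLM
    mx: MxAgent
    memory: List[str] = field(default_factory=list)
    guidance: str = ""

    def respond(self, patient_message: str) -> str:
        # Record the patient's turn in local memory.
        self.memory.append(f"patient: {patient_message}")
        # Pass the gathered information to the Mx Agent (assumed: every turn).
        self.guidance = self.mx.plan(self.memory)
        # Use the returned guidance to steer the next reply.
        prompt = f"Guidance: {self.guidance}\n" + "\n".join(self.memory)
        reply = self.llm(prompt)
        self.memory.append(f"clinician: {reply}")
        return reply


# Stub "models" so the sketch runs without any external service.
def stub_mx_llm(prompt: str) -> str:
    return "ask about symptom duration"


def stub_dialogue_llm(prompt: str) -> str:
    if "symptom duration" in prompt:
        return "How long has this been going on?"
    return "Tell me more."


agent = DialogueAgent(llm=stub_dialogue_llm, mx=MxAgent(llm=stub_mx_llm))
print(agent.respond("I have a cough."))
```

The point of the split is that the conversational side can stay responsive with a small context, while the expensive reasoning and tool use happen in a separate call whose output only needs to be a short piece of guidance.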

In a head-to-head trial against GPs, using simulated patient chat encounters over multiple virtual visits, agent performance compared favorably with that of the humans.

It certainly seems that, at the expense of computational resources, these agent techniques are expanding the scope in which AI can offer performance comparable to human operators.