The LLM That Knows When You'll Die

Your death is just a token to predict, after all.

Plenty of dramatic headlines are circulating about this article, including the "This AI tool predicts your risk of 1000 diseases" one, so I'll add to the pile!

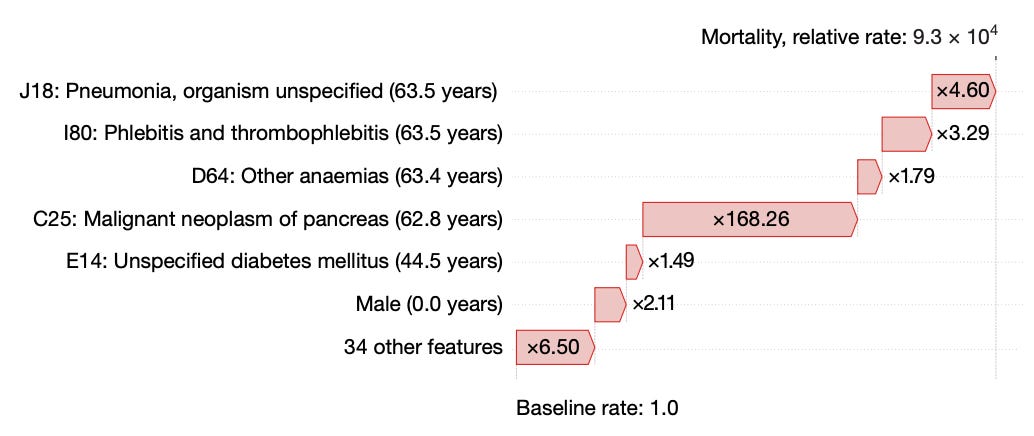

The reality is far more complicated, naturally, and the study itself is primarily of academic, not practical, interest. Effectively, the authors broke down the medical histories of patients in the UK Biobank into medical event tokens. In language models, one token follows another to create a sequence of words. In this instance, medical event tokens beget future medical events, and thus model disease progression. In some respects, this works very sensibly. Consider, for example, this breakdown of the strongest features associated with mortality in 63.5-year-olds, a progression of tokens dominated by pancreatic cancer and its subsequent complications:

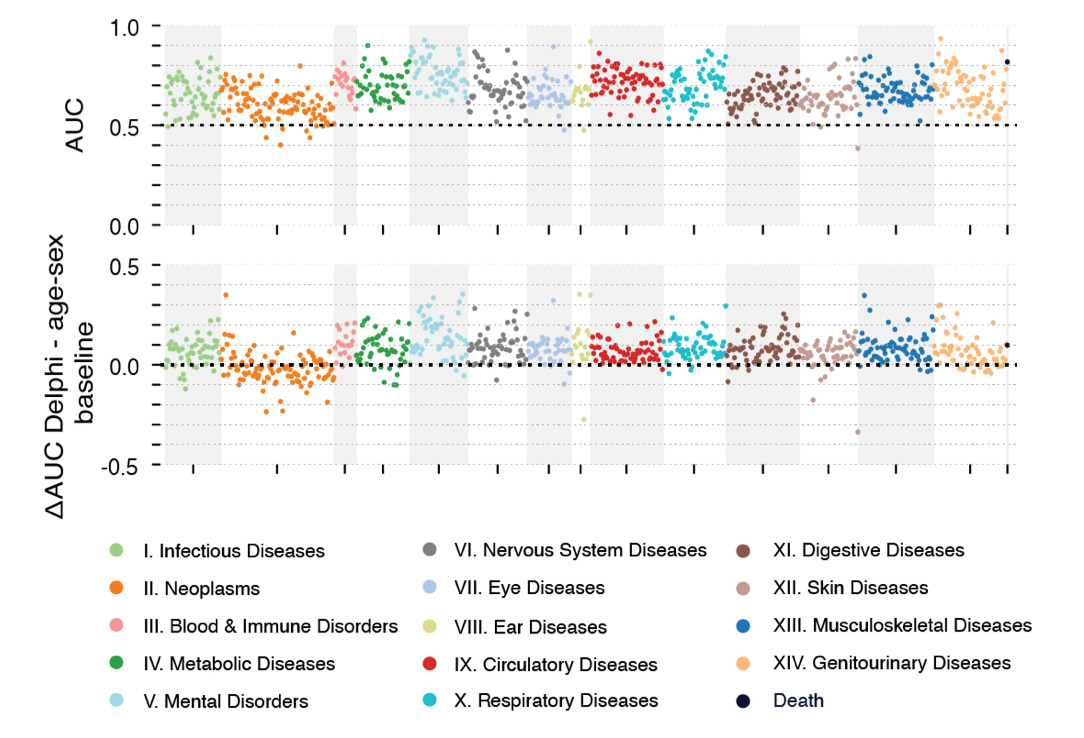
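To make the "medical events as tokens" idea concrete, here is a toy sketch. It is not the authors' model: it swaps the transformer for a simple bigram counter, and the patient histories and event names are invented purely for illustration. The point is only to show how a history becomes a sequence, and how "what tends to come next" can be read off that sequence.

```python
from collections import Counter, defaultdict

# Hypothetical patient histories as (age_in_years, event) pairs.
# Event names are made up for illustration, not the study's vocabulary.
histories = [
    [(55.0, "type_2_diabetes"), (62.1, "pancreatic_cancer"),
     (63.0, "jaundice"), (63.5, "death")],
    [(48.2, "hypertension"), (60.4, "type_2_diabetes"),
     (66.9, "pancreatic_cancer"), (67.8, "death")],
    [(51.3, "hypertension"), (58.7, "myocardial_infarction"),
     (70.2, "heart_failure")],
]


def to_tokens(history):
    """Order events by age and keep only the event names as a token sequence."""
    return [event for _, event in sorted(history)]


def fit_bigram(sequences):
    """Count how often each token is immediately followed by each other token."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def next_event_probs(counts, token):
    """Convert the follow-up counts for one token into probabilities."""
    followers = counts.get(token, Counter())
    total = sum(followers.values())
    return {nxt: n / total for nxt, n in followers.items()}


sequences = [to_tokens(h) for h in histories]
model = fit_bigram(sequences)
probs = next_event_probs(model, "pancreatic_cancer")
print(probs)
```

A real sequence model conditions on the whole history (and on timing) rather than just the previous event, which is exactly what the bigram shortcut throws away.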

However, on the whole, these are not profoundly accurate or usable predictions. The AUCs for predicting various types of conditions are usually in the 0.5 to 0.7 range, where 0.5 is no better than chance and 1.0 is perfect discrimination. Overall, that is a little better than the baseline risk from age and sex alone, but only enough to illustrate the novelty of the method, not to use as a crystal ball:

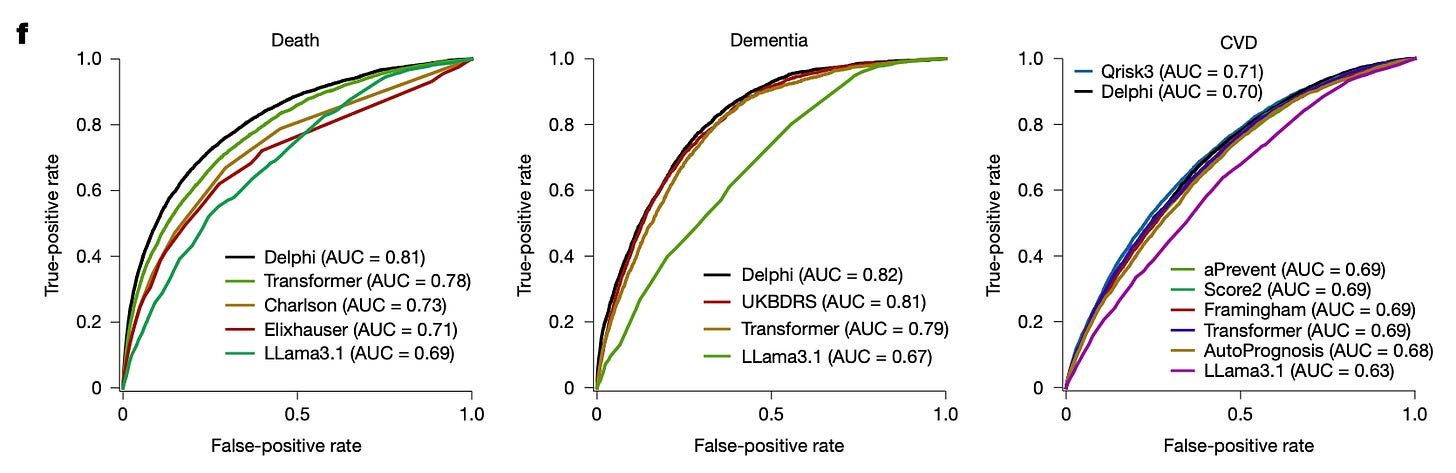
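For a feel of what an AUC in that range means, here is a minimal sketch that computes it from its pairwise definition: the chance that a randomly chosen patient who developed the condition was given a higher risk score than a randomly chosen patient who did not. The labels and scores below are invented for illustration and have nothing to do with the study's data.

```python
def auc(labels, scores):
    """Fraction of (positive, negative) pairs where the positive scores higher.

    Ties count as half a win. labels are 1 (event occurred) or 0 (it did not).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Hypothetical outcomes and predicted risks for six patients.
labels = [1, 0, 1, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.5, 0.7]
print(round(auc(labels, scores), 2))
```

Here the model ranks the sick patient above the healthy one in 6 of 9 pairs, an AUC of about 0.67: better than a coin flip, but wrong in a third of head-to-head comparisons.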

The article also includes a few comparisons to other prediction instruments, and, without any special tuning, this general predictive model achieves performance similar to specialized risk-prediction models. For example, it was better at predicting death than the Charlson or Elixhauser instruments, and effectively the same for cardiovascular risk as QRISK3, aPrevent, and Framingham.

Overall, it's certainly an interesting demonstration of transformer use on a large data set. Clearly, though, the future remains unwritten.