The Predictive Model Vendor Lies

Performance doesn't match up to the promises.

This is one of those “your mileage may vary” articles in which <gasp> a product did not live up to expectations.

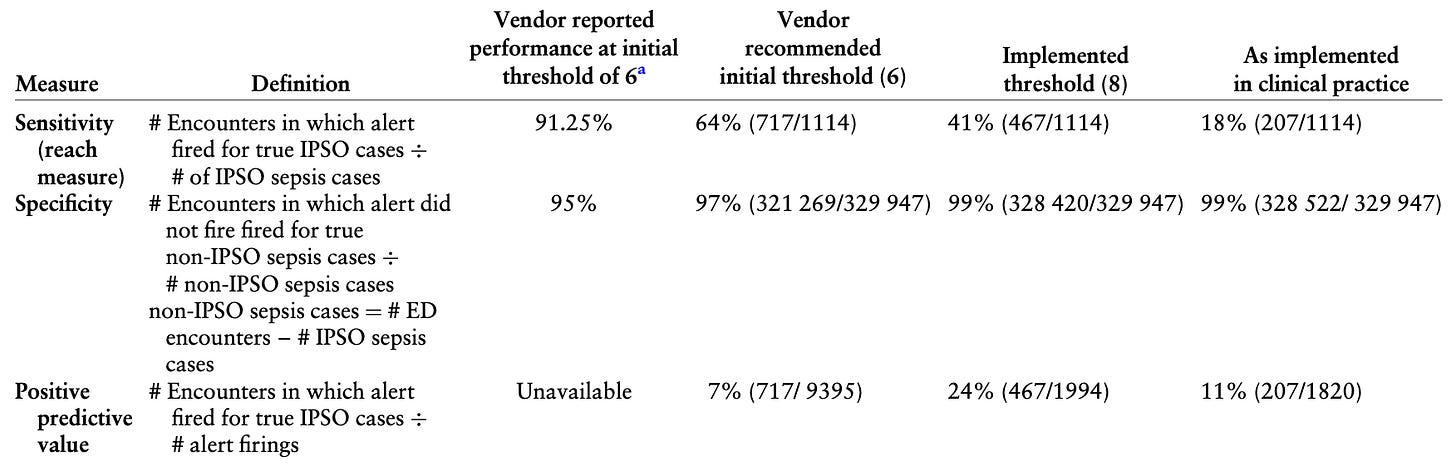

Specifically, these authors are highlighting their experience with the Epic Systems pediatric sepsis module, which is based on a model derived from a data set of encounters from Nationwide Children’s Hospital. The derivation promised a certain level of performance, and that level was not met. As seen in the table below, the authors tested model performance at the vendor-recommended sepsis risk score threshold, at a higher score threshold, and then at a further tweaked threshold focused primarily on picking up hypothermic young children:

Even sacrificing sensitivity for specificity still resulted in dismal PPV.

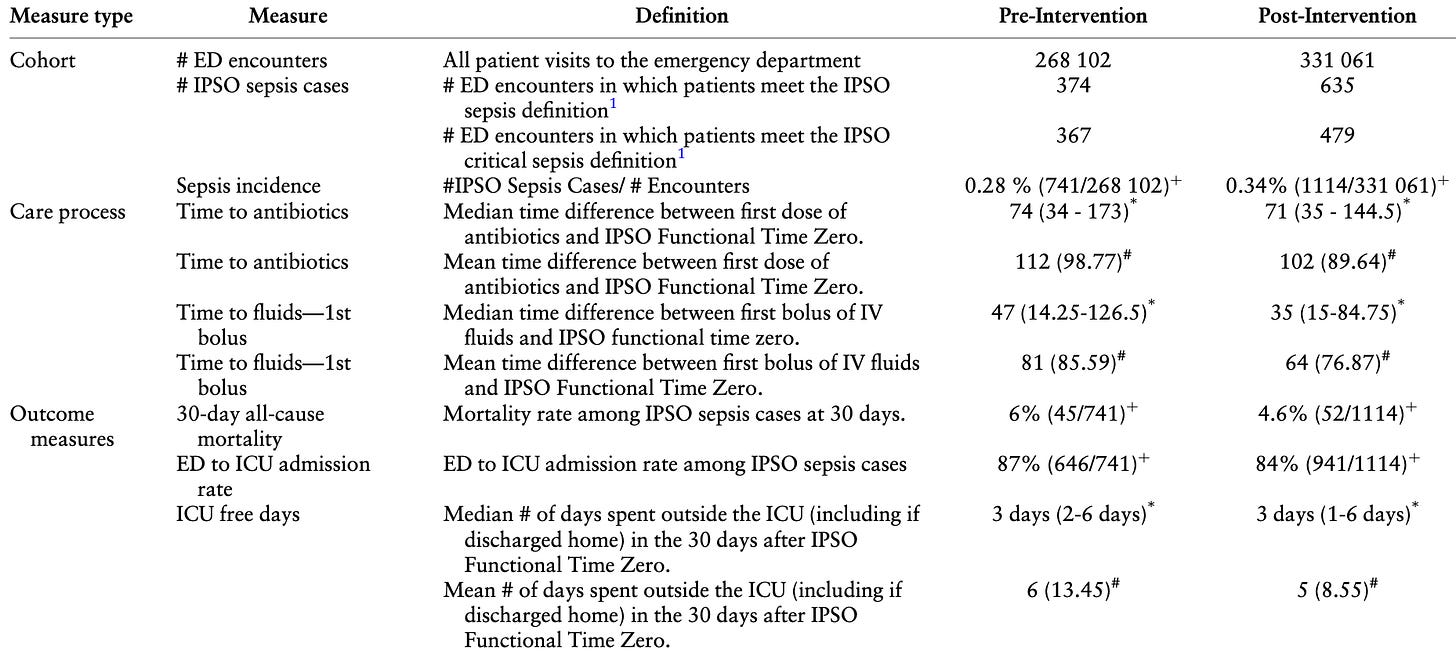
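For anyone wondering why trading sensitivity for specificity doesn't rescue PPV, the arithmetic is just Bayes' rule working against a rare condition. Here is a minimal sketch; the prevalence, sensitivity, and specificity values are made up for illustration and are not taken from the study, and `ppv` is a hypothetical helper name.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value from Bayes' rule."""
    true_pos = sensitivity * prevalence                   # P(alert and sepsis)
    false_pos = (1.0 - specificity) * (1.0 - prevalence)  # P(alert and no sepsis)
    return true_pos / (true_pos + false_pos)


# Hypothetical numbers, NOT from the study: sepsis in 0.5% of encounters.
prevalence = 0.005

# (sensitivity, specificity) at a looser and a stricter alert threshold.
for sens, spec in [(0.80, 0.90), (0.60, 0.98)]:
    print(f"sens={sens:.2f} spec={spec:.2f} -> PPV={ppv(sens, spec, prevalence):.1%}")
```

With these assumed inputs, PPV moves from roughly 4% to roughly 13%: better, but still the large majority of alerts are false alarms, because the non-septic population is so much larger than the septic one.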

However, there’s another component to consider, the pre- and post-implementation outcomes:

As you can see, there are – effectively – trivial differences of a few minutes for antibiotic and fluid administration. There are small differences in patient-oriented outcomes, but those all shake out from the increased rate of identification of “sepsis”, roping in an excess of non-critical sepsis cases. This is very typical of these sorts of EHR interventions demonstrating “improved outcomes” based on extra, mild, “sepsis” cases flowing from the circular intention-to-treat study definitions of sepsis.
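The dilution effect is easy to see with a toy calculation. The counts below are invented purely for illustration (they are not from the study), and the assumption that the extra mild cases carry zero mortality is deliberately extreme.

```python
def mortality_rate(deaths: int, cases: int) -> float:
    """Crude mortality: deaths divided by cases labeled as sepsis."""
    return deaths / cases


# Hypothetical pre-implementation cohort (illustrative numbers only).
pre_cases, pre_deaths = 100, 10

# Post-implementation: same sick patients, plus extra mild "sepsis" cases
# swept in by the alert. Assume none of the extra cases die.
extra_mild_cases = 50
post_cases = pre_cases + extra_mild_cases
post_deaths = pre_deaths

print(f"pre:  {mortality_rate(pre_deaths, pre_cases):.1%}")
print(f"post: {mortality_rate(post_deaths, post_cases):.1%}")
```

Nobody's outcome changed in this sketch, yet "sepsis mortality" falls from 10.0% to 6.7% simply because the denominator grew.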

Yet another study to toss on the pile of downstream consequences of our mandated pursuit of “quality”.