The Sensitivity of Head Injury Biomarkers Is Not 100%

An artifact of statistical chicanery gone rogue.

For those who have been following the evolving evidence for head-injury biomarkers, it is a Known Fact that these are imperfect tools. The combination of GFAP and UCH-L1 is very sensitive but profoundly non-specific. Its sensitivity, as established in trials such as those leading to FDA approval of the biomarker platform, appears to be in the range of 96-98%. Because of this limitation, the FDA approval requires that the test not be used as a stand-alone device, but as an adjunct to other clinical information, e.g., clinical risk-stratification tools.

So, what is happening here?

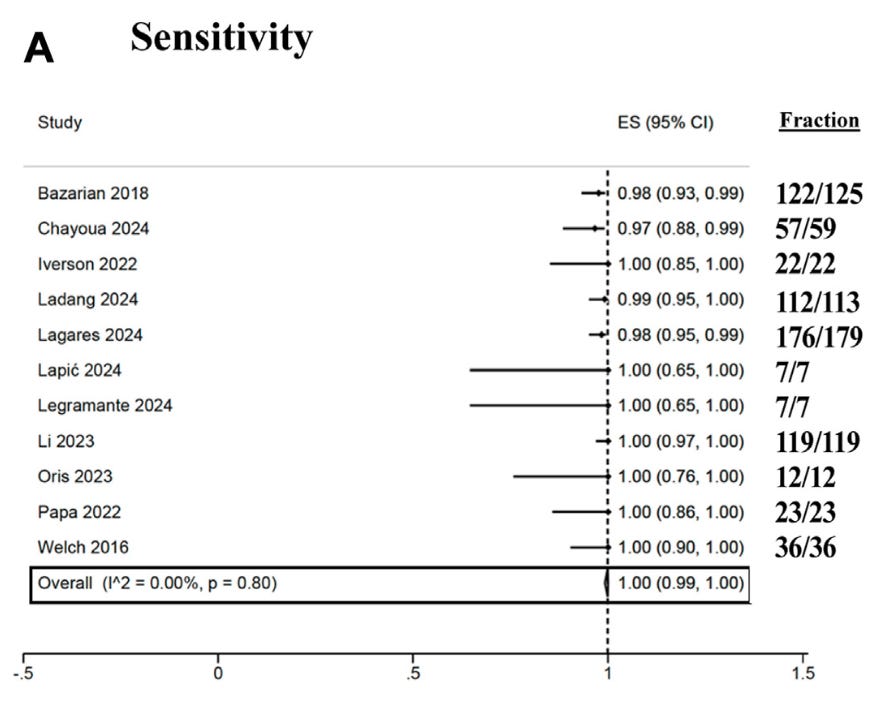

This recently published meta-analysis pooled 11 studies evaluating GFAP and UCH-L1 and, as you can see above, concludes in the authors’ own words: “In summary, the combined use of GFAP and UCH-L1 demonstrates a sensitivity of 100% in ruling out intracranial injury in adults following mTBI”. In common parlance, 100% sensitivity implies zero false negatives, with a small amount of imprecision reflected in a 95% confidence interval extending down to 99%, as above.

But it is obvious from the data being pooled that the biomarker combination misses multiple cases of intracranial injury. In fact, if these data are simply summed, the 9 missed cases of intracranial hemorrhage give a naive patient-level sensitivity of 98.6%. How on earth can this be sold as “100% sensitivity”?
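The naive patient-level figure is just total true positives over total patients with injury, ignoring which study each patient came from. Below is a minimal sketch, assuming each study is summarized as a hypothetical `(true_positives, false_negatives)` pair. The counts are made up for illustration and are not the meta-analysis data. The same arithmetic turns a sensitivity into a “1 in N” miss rate among patients with intracranial injury.

```python
def naive_pooled_sensitivity(studies):
    """Sum counts across all studies, then divide: TP / (TP + FN).

    `studies` is a list of (true_positives, false_negatives) tuples,
    a hypothetical input format chosen for this sketch.
    """
    tp = sum(t for t, _ in studies)
    fn = sum(f for _, f in studies)
    return tp / (tp + fn)


def miss_rate_one_in(sensitivity):
    """Express the false-negative rate (1 - sensitivity) as '1 in N'."""
    return 1.0 / (1.0 - sensitivity)


# Hypothetical per-study counts (NOT the published data).
example = [(120, 3), (85, 1), (310, 4), (60, 0), (45, 2)]
print(f"naive pooled sensitivity: {naive_pooled_sensitivity(example):.3f}")
print(f"98.6% sensitivity misses about 1 in {miss_rate_one_in(0.986):.0f}")
```

At 98.6%, the miss rate works out to roughly 1 in 71 patients with intracranial hemorrhage.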

Lies, damned lies, and statistics, as they say. A meta-analysis is not a simple summing of patient-level counts, but a study-level synthesis. As part of that synthesis, meta-analytic methods can apply variance-stabilizing transformations, which put each study’s proportion on a scale where its variance depends on sample size rather than on the proportion itself. This matters most when proportions sit near 0 or 1. The metaprop package within Stata uses one such transformation, the Freeman-Tukey double arcsine, and its back-transformation has the unintended effect of squashing pooled estimates up against the boundary. Thus, studies with sensitivity in the ~97-98% range are transformed, pooled, and then back-transformed to a value at or near 1, giving the statistical result you see above.
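To see how the back-transformation can inflate an estimate near 1, here is a sketch of the Freeman-Tukey double arcsine together with Miller’s (1978) inversion. The inversion needs a single sample size; this sketch assumes the harmonic mean of the study sizes, a common choice that has been criticized in the statistical literature. Three further assumptions apply. Pooling here is common-effect inverse-variance for simplicity, whereas metaprop’s defaults and internals may differ. The clamping of out-of-range values to 0 or 1 is also this sketch’s convention. Finally, all study counts are hypothetical.

```python
import math


def ft_transform(x, n):
    """Freeman-Tukey double arcsine of x events out of n (range 0 to pi)."""
    return (math.asin(math.sqrt(x / (n + 1)))
            + math.asin(math.sqrt((x + 1) / (n + 1))))


def ft_back_transform(t, n):
    """Miller (1978) inversion of the double arcsine for sample size n.

    Values at or beyond the transform of 0/n or n/n are clamped to 0 or 1
    (a convention assumed for this sketch).
    """
    if t <= ft_transform(0, n):
        return 0.0
    if t >= ft_transform(n, n):
        return 1.0
    s = math.sin(t)
    q = s + (s - 1.0 / s) / n
    root = math.sqrt(max(0.0, 1.0 - q * q))
    return 0.5 * (1.0 - math.copysign(1.0, math.cos(t)) * root)


def ft_pooled_proportion(studies):
    """Common-effect inverse-variance pooling on the double-arcsine scale.

    `studies` is a list of (events, total) pairs. Each transformed value
    gets variance 1 / (n + 0.5); the pooled value is back-transformed
    using the harmonic mean of the study sizes (an assumption here).
    """
    weights = [n + 0.5 for _, n in studies]
    t_values = [ft_transform(x, n) for x, n in studies]
    t_pooled = sum(w * t for w, t in zip(weights, t_values)) / sum(weights)
    n_harmonic = len(studies) / sum(1.0 / n for _, n in studies)
    return ft_back_transform(t_pooled, n_harmonic)


# Hypothetical studies: two large ones near 98%, three small ones.
studies = [(392, 400), (294, 300), (19, 19), (12, 12), (24, 25)]
naive = sum(x for x, _ in studies) / sum(n for _, n in studies)
print(f"naive: {naive:.3f}  Freeman-Tukey pooled: {ft_pooled_proportion(studies):.3f}")

# A single 98% study back-transformed with its own n versus a small n.
t = ft_transform(980, 1000)
print(ft_back_transform(t, 1000), ft_back_transform(t, 10))
```

The last line shows the core problem. The same transformed value maps back to about 0.98 when the study’s own size is used, but maps to exactly 1.0 when a small summary sample size is plugged into the inversion. Small studies drag the harmonic mean down, so a handful of them can have this effect.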

This is obviously an unintuitive result, and it is why some statisticians have called out Freeman-Tukey transformations as producing grossly misleading results when pooling studies whose sensitivities fall in a narrow range up against the boundary. The simple work-around is to use a generalized linear mixed model (GLMM), which gives a pooled sensitivity of ~98.6% (95% CI 97% to 99%), with minor variation depending on whether fixed-effects or random-effects models are used. This is the appropriate window into these pooled data, not the 100% sensitivity unreservedly endorsed by the authors.
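Why does the fixed-effects model land on the naive figure? An intercept-only binomial model with a logit link, fitted to counts summed across studies, has its maximum-likelihood estimate at exactly total true positives over total patients with injury. The sketch below fits that model by Newton-Raphson and adds a Wald interval on the logit scale. The input format and counts are hypothetical. This covers only the fixed-effects case. A random-effects GLMM adds a study-level random intercept and needs numerical integration, for example Stata’s `melogit` or R’s `lme4::glmer`, which is beyond a short sketch.

```python
import math


def expit(b):
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-b))


def fixed_effect_logit_pool(studies, z=1.96, tol=1e-12, max_iter=100):
    """Intercept-only binomial GLM (logit link) fitted by Newton-Raphson.

    `studies` is a list of (true_positives, false_negatives) pairs, a
    hypothetical input format. Returns (estimate, lower, upper), with a
    Wald interval computed on the logit scale and back-transformed.
    """
    tp = sum(t for t, _ in studies)
    total = sum(t + f for t, f in studies)
    if tp == 0 or tp == total:
        raise ValueError("MLE is on the boundary; the Wald interval is undefined")
    b = 0.0  # intercept on the logit scale
    for _ in range(max_iter):
        p = expit(b)
        score = tp - total * p        # derivative of the log-likelihood
        info = total * p * (1.0 - p)  # Fisher information
        step = score / info
        b += step
        if abs(step) < tol:
            break
    p = expit(b)
    se = 1.0 / math.sqrt(total * p * (1.0 - p))
    return p, expit(b - z * se), expit(b + z * se)


# Hypothetical per-study counts (NOT the published data).
data = [(120, 3), (85, 1), (310, 4), (60, 0), (45, 2)]
est, lo, hi = fixed_effect_logit_pool(data)
print(f"pooled sensitivity {est:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```

Because the estimate and interval are built on the logit scale, they can never exceed 1. They also reflect every observed false negative instead of being pushed onto the boundary.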

Is a ~1 in 70 miss rate for intracranial hemorrhage OK for a biomarker? It might be, if it were specific enough to dramatically reduce CT use, but specificity sits somewhere in the 20-30% range.

This means the vast majority of positive results will be false positives, and any indication creep beyond the population otherwise appropriate for a head CT will result in increased CT use, rather than a decrease as hoped.
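The arithmetic behind the false-positive problem is short. The sketch below takes sensitivity, specificity and the prevalence of intracranial injury in the tested population. It returns the share of positive results that are false and the share of tested patients who test negative. The prevalence used here (7%) is purely an illustrative assumption and is not a figure from the meta-analysis.

```python
def positive_test_breakdown(sensitivity, specificity, prevalence):
    """Per-patient rates for a test applied to a population.

    Returns (fraction of positive results that are false positives,
             fraction of all tested patients who test negative).
    `prevalence` is the assumed proportion of tested patients with
    intracranial injury, an input assumption for this sketch.
    """
    tp = sensitivity * prevalence
    fp = (1.0 - specificity) * (1.0 - prevalence)
    fn = (1.0 - sensitivity) * prevalence
    tn = specificity * (1.0 - prevalence)
    return fp / (tp + fp), fn + tn


# Hypothetical inputs: sensitivity near the GLMM estimate, specificity
# inside the 20-30% range, and an assumed 7% prevalence.
false_share, negative_share = positive_test_breakdown(0.986, 0.25, 0.07)
print(f"positive results that are false: {false_share:.0%}")
print(f"tested patients who test negative: {negative_share:.0%}")
```

The two outputs correspond to the two scenarios in the paragraph above. In a population that would have been scanned anyway, at most the negative share of CTs could be avoided. Among patients who would not otherwise have been scanned, every positive result, most of them false, is a new CT.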

Finally, this meta-analysis suffers from an unavoidable problem universal to meta-analyses: it obscures the procedures and peculiarities of each individual study. Many of the included studies were run by the manufacturers of the assays themselves. Many used centrifuged and frozen plasma samples analyzed at a central laboratory, rather than whole blood on the point-of-care platform being popularized today. All of these reduce the trustworthiness of these observations and their generalizability to current clinical practice.

So, put bluntly: this analysis does not mean head injury biomarkers are “100% sensitive” and immune to false negatives, despite the authors’ fevered embrace of this result.