Yes, Tell Patients If You've Used AI

It doesn't matter if *you* think it matters.

This article puts a call out with a pretty simple message: if you’ve used AI, tell your patients.

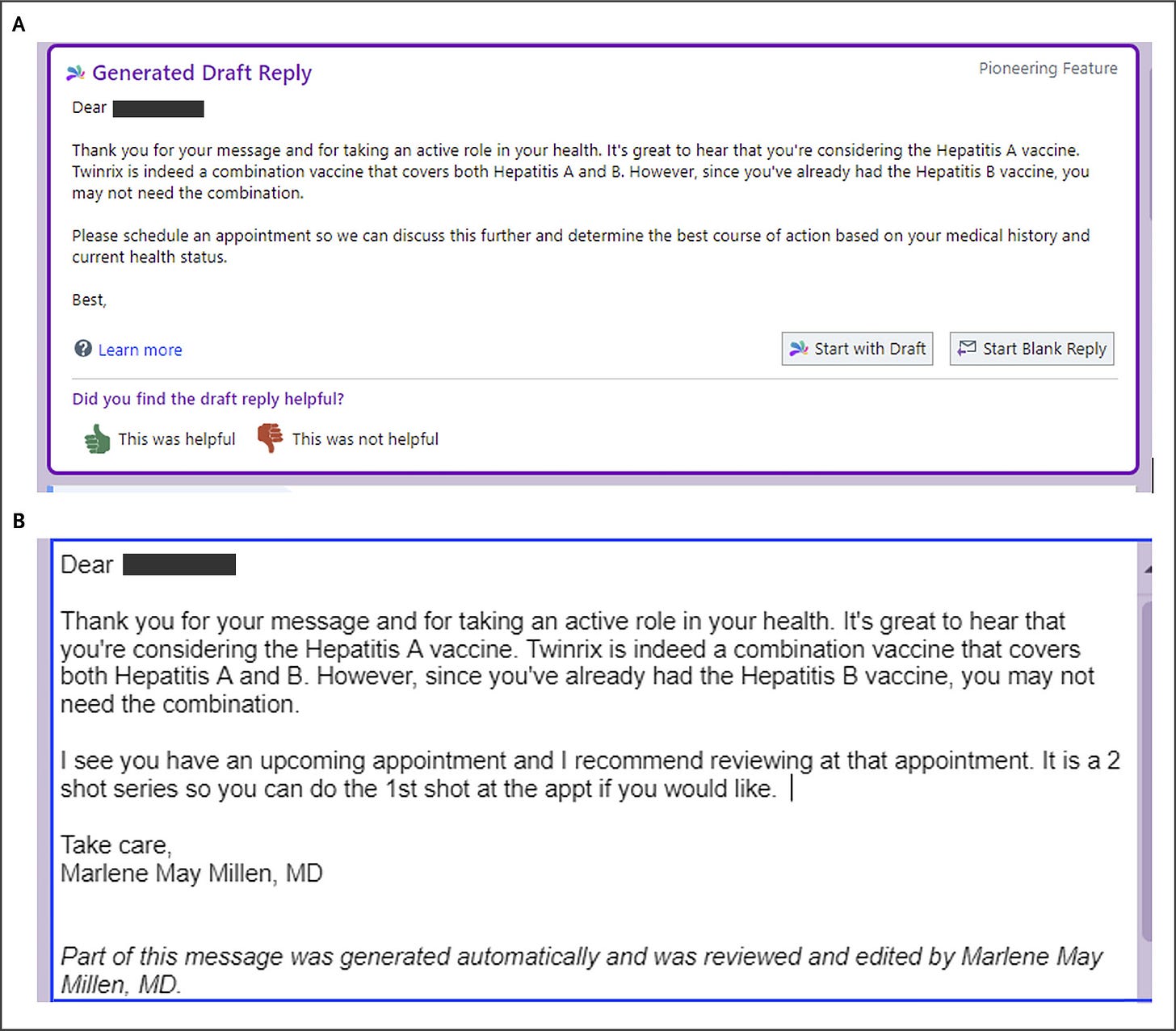

The authors from UCSD use AI-drafted patient reply messages as an example, with their interface (Epic, as usual) and accompanying text shown here:

There is discussion in their manuscript regarding how they chose this specific language, as well as a brief overview of some relevant ethical considerations.

Obviously, transparency is best practice in medicine. With increasing task and cognitive automation, both physicians and patients are still learning how to communicate and discuss these issues. Whether it is AI transcription for scribing, AI-augmented decision-making, or AI-drafted replies, patients ought to be informed and provide consent as far as is feasible.

It also ought to go without saying that, with LLM-based tools still producing inaccuracies and important omissions, informing patients helps them be part of the team preventing downstream harms from errant AI.